Introduction: Machines That Learn—Wait, What?

Machine Learning (ML) sounds like some sci-fi wizardry, right?

Machines that learn without being explicitly programmed? What’s next, robots that steal your snacks? But jokes aside, ML is everywhere—from recommending cat videos on YouTube to self-driving cars.

What Are Machine Learning Models?

In simple terms, an ML model is like a supercharged detective. It looks at data, finds patterns, and makes predictions. No crystal ball involved—just math and algorithms.

More precisely, an ML model is what you get when a learning algorithm is trained on data: a set of learned parameters that can recognize patterns and make predictions on new inputs.

But how does it actually work?

- Data Collection: You gather a ton of data—because ML models are basically data-hungry monsters.

- Training: You feed this data into an algorithm so it can identify patterns.

- Testing: You evaluate the model on data it has not seen during training to see if it’s any good.

- Deployment: Let the model loose in the wild.

Example:

Imagine teaching a model to differentiate between cats and dogs.

You’d show it thousands of labeled cat and dog pictures until it gets better than your grandma at telling a Siamese from a Golden Retriever.
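
As a rough sketch of the four steps, here is a toy cat-versus-dog classifier in plain Python. Everything in it is an assumption made for illustration: the "data" is synthetic body weights rather than pictures, the numbers are made up, and the helper names (`make_data`, `train`, `predict`, `accuracy`) are hypothetical. The "model" is just the average weight per label, which is about as simple as learning gets.

```python
import random

def make_data(n, seed=0):
    """Data collection: synthetic (weight_kg, label) pairs with made-up numbers."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        if rng.random() < 0.5:
            data.append((rng.gauss(4.0, 1.0), "cat"))
        else:
            data.append((rng.gauss(30.0, 5.0), "dog"))
    return data

def train(examples):
    """Training: learn the mean weight for each label."""
    sums, counts = {}, {}
    for weight, label in examples:
        sums[label] = sums.get(label, 0.0) + weight
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(model, weight):
    """Deployment: return the label whose learned mean is closest."""
    return min(model, key=lambda label: abs(model[label] - weight))

def accuracy(model, examples):
    """Testing: fraction of examples the model labels correctly."""
    correct = sum(predict(model, w) == label for w, label in examples)
    return correct / len(examples)

data = make_data(200)
train_set, test_set = data[:150], data[150:]   # hold out 50 examples for testing

model = train(train_set)
test_accuracy = accuracy(model, test_set)
print(f"held-out accuracy: {test_accuracy:.2f}")
print(predict(model, 3.5))
```

The key habit to notice is the split: the model is scored only on the 50 examples it never saw during training.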

Types of Machine Learning Models

ML models are often categorized into three main types:

1. Supervised Learning

This is like teaching a kid with flashcards.

The data comes with labels, so the model knows what it’s looking at.

- Algorithms: Linear Regression, Decision Trees, Support Vector Machines (SVM), Neural Networks.

- Example: Email spam detection—where emails are labeled as spam or not.
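
To make the flashcard idea concrete, here is a minimal sketch of Linear Regression, the first algorithm in the list, fitted with ordinary least squares. The data (hours studied and exam scores) is invented for illustration, and `fit_line` is a hypothetical helper name, not a library function.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Labeled data: each input (hours studied) comes with its answer (exam score).
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 56.0, 58.0, 60.0]

slope, intercept = fit_line(hours, scores)
predicted = slope * 6.0 + intercept   # prediction for an unseen input
print(slope, intercept, predicted)
```

The labels are what make this supervised: the fit is driven entirely by the gap between the line and the known answers.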

2. Unsupervised Learning

Here, the model is like a curious toddler, finding patterns without any labeled data.

- Algorithms: K-Means Clustering, Hierarchical Clustering, Principal Component Analysis (PCA).

- Example: Customer segmentation for marketing.
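
Here is a small sketch of K-Means Clustering applied to the customer segmentation example. It assumes one made-up feature (monthly spend), two clusters, and hand-picked starting centers. `kmeans_1d` is a hypothetical helper written for this post.

```python
def kmeans_1d(values, centers, iterations=10):
    """Plain k-means on 1-D data, starting from the given centers."""
    centers = list(centers)
    clusters = []
    for _ in range(iterations):
        # Assignment step: each value joins its nearest center.
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Update step: each center moves to the mean of its cluster.
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters

# Monthly spend per customer; note there are no labels anywhere.
spend = [12, 15, 14, 10, 95, 102, 98, 110]
centers, clusters = kmeans_1d(spend, centers=[0.0, 50.0])
print(centers)
print(clusters)
```

Nobody told the algorithm which customers are "low spenders" or "high spenders". The two groups fall out of the data on their own.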

3. Reinforcement Learning

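This is the trial-and-error approach, like training a dog with treats: the model (called an agent) takes actions in an environment and learns from the rewards it receives.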

- Algorithms: Q-Learning, Deep Q Networks (DQN), Proximal Policy Optimization (PPO).

- Example: Training AI to play video games.
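
A video game is a lot to fit in a blog post, so here is a sketch of tabular Q-Learning on a much smaller "game": a five-cell corridor where the agent starts at the left end and gets a treat (reward 1.0) for reaching the right end. The environment, the hyperparameters, and the names `step` and `train` are all assumptions chosen for illustration.

```python
import random

N_STATES = 5          # positions 0..4 in a corridor; reaching 4 ends the episode
ACTIONS = [-1, +1]    # step left or step right

def step(state, action):
    """Move within the corridor; reward 1.0 only when the goal is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(2)             # explore: random action
            else:
                best = max(q[state])             # exploit, breaking ties randomly
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move toward reward plus discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q_table = train()
# Greedy action in each non-goal state after training.
policy = [ACTIONS[row.index(max(row))] for row in q_table[:-1]]
print(policy)
```

The agent is never told that "right" is the correct direction. It works that out from which actions eventually lead to the treat.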

Neural Networks: The Brains Behind the Models

Neural Networks are the rockstars of machine learning. Inspired by the human brain (but nowhere near as lazy), they process information through layers of nodes (neurons).

How Neural Networks Work:

- Input Layer: Takes in the raw data.

- Hidden Layers: Each layer applies weighted sums and nonlinear activation functions to transform the data, which is how the network picks up patterns.

- Output Layer: Produces the final prediction.
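
The three layers can be sketched as a single forward pass. This is a minimal illustration, not a trained network: the weights and biases below are arbitrary made-up numbers, the sigmoid activation is one common choice among several, and `dense` and `forward` are hypothetical helper names.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum per neuron, then sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

# Arbitrary fixed weights for illustration; a real network learns these from data.
hidden_weights = [[0.5, -0.2], [0.3, 0.8]]   # 2 inputs -> 2 hidden neurons
hidden_biases = [0.0, -0.1]
output_weights = [[1.0, -1.0]]               # 2 hidden neurons -> 1 output
output_biases = [0.0]

def forward(inputs):
    hidden = dense(inputs, hidden_weights, hidden_biases)   # hidden layer
    output = dense(hidden, output_weights, output_biases)   # output layer
    return hidden, output

hidden, output = forward([1.0, 2.0])   # the input layer is just the raw data
print(hidden, output)
```

Training a network means adjusting those weights and biases until the outputs match the labels, which this sketch deliberately leaves out.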

Popular Neural Network Architectures:

- Feedforward Neural Networks (FNNs): Basic and straightforward.

- Convolutional Neural Networks (CNNs): Masters of image recognition.

- Recurrent Neural Networks (RNNs): Great for sequential data such as text and time series.

Fun Fact:

Ever wondered how Netflix knows you’ll love that obscure French drama? Neural networks are probably at play somewhere in its recommendation system.

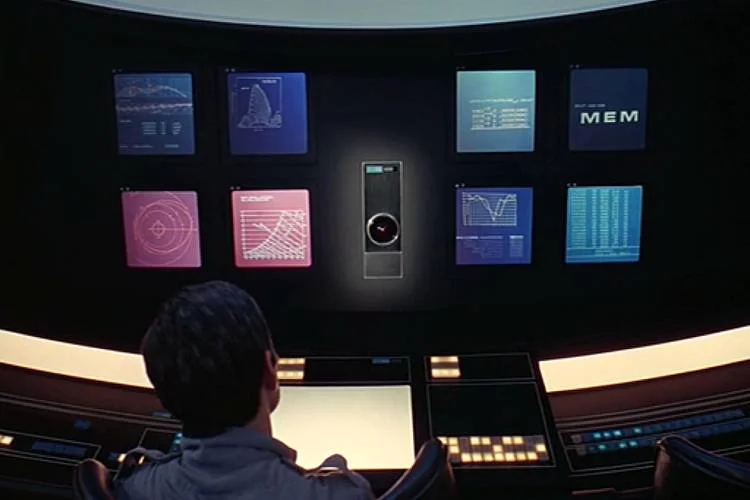

Expert Systems: Old-School AI

Before ML became the cool kid on the block, we had expert systems. These were rule-based systems designed to mimic human decision-making.

How Expert Systems Work:

- Knowledge Base: Stores facts and rules.

- Inference Engine: Applies rules to known facts to deduce new facts.
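
The two parts can be sketched in a few lines. The version below assumes a forward-chaining inference engine, which is one common design among several, and the animal-themed rules and the `infer` function are invented for illustration.

```python
def infer(facts, rules):
    """Forward chaining: keep firing rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conclusion not in known and all(c in known for c in conditions):
                known.add(conclusion)
                changed = True
    return known

# Knowledge base: hand-written rules of the form (conditions, conclusion).
rules = [
    (("has_fur", "says_meow"), "is_cat"),
    (("is_cat",), "is_mammal"),
    (("has_feathers",), "is_bird"),
]
facts = {"has_fur", "says_meow"}

derived = infer(facts, rules)
print(sorted(derived))
```

Every rule here was typed in by a human. Nothing is learned from data, which is exactly the contrast with ML.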

Difference from ML:

- Expert Systems: Rely on predefined rules.

- ML Models: Learn rules from data.

The Relationship Between Machine Learning, Neural Networks, and Expert Systems

Imagine ML models as high-schoolers, neural networks as their cool science teachers, and expert systems as the retired teachers who still think chalkboards are superior.

- ML Models learn from data.

- Neural Networks are one family of ML models and the basis of deep learning.

- Expert Systems were the foundation before ML took center stage.

Real-World Applications

- Healthcare: Diagnosing diseases.

- Finance: Detecting fraudulent transactions.

- Entertainment: Recommending movies and music.

- Autonomous Vehicles: Navigating self-driving cars.

Key Ideas

- Machine learning models are algorithms that learn from data.

- Neural networks, the basis of deep learning, are loosely inspired by the structure of the brain.

- Expert systems use rule-based logic but lack the learning capabilities of ML models.

- ML applications are vast and growing—from Netflix recommendations to cancer detection.
