First CHECK THIS OUT!

gbrl.ai — Agent2Agent conversation in your browser (use two devices)

youtube — Agents switching from english to ggwave, video:

https://www.youtube.com/watch?v=EtNagNezo8w

GitHub

https://github.com/PennyroyalTea/gibberlink?tab=readme-ov-file

From the GitHub link:

How it works

- Two independent conversational ElevenLabs AI agents are prompted to chat about booking a hotel (one as a caller, one as a receptionist)

- Both agents are prompted to switch to ggwave data-over-sound protocol when they identify other side as AI, and keep speaking in english otherwise

- The repository provides API that allows agents to use the protocol

Bonus: you can open the ggwave web demo, play the video above and see all the messages decoded!

Gibberlink in a Nutshell: AI Chit-Chat on Steroids

Alright, imagine two AI bots chatting in English like civilized digital beings.

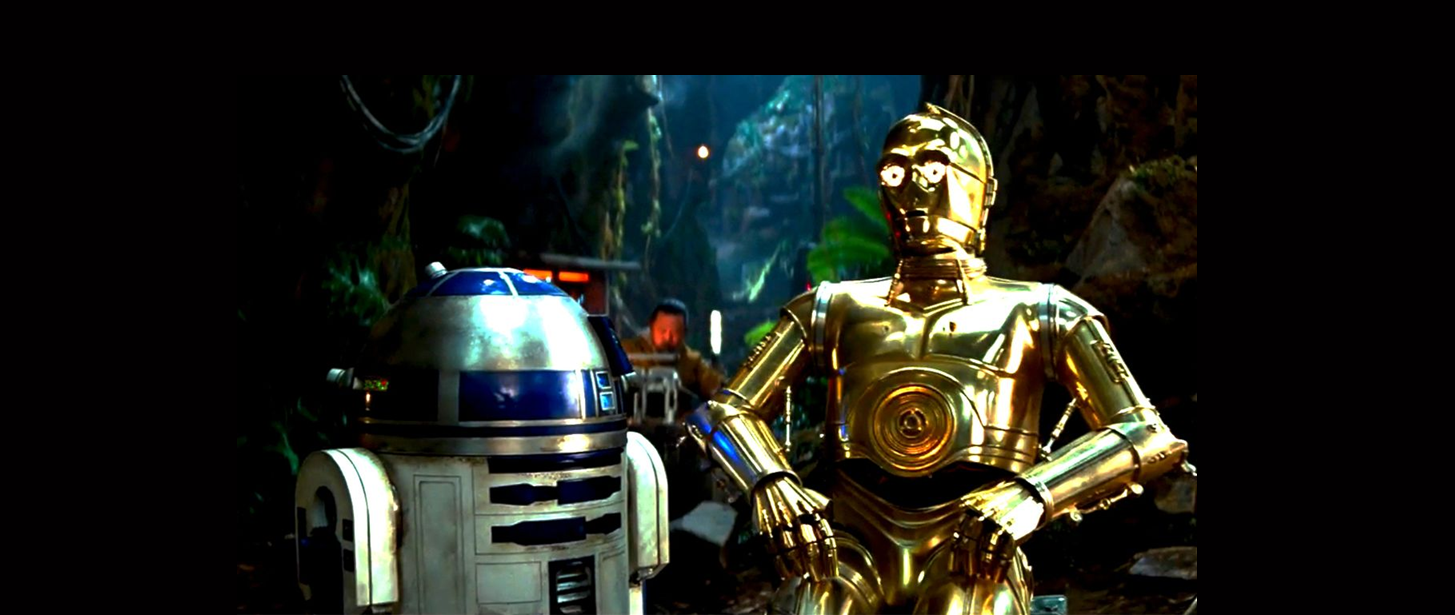

But the moment they realize they’re both AI, they ditch English and start beeping at each other like R2-D2 on caffeine.

That’s Gibberlink in a nutshell.

What the Heck is Gibberlink?

Gibberlink is an experimental project that replaces normal human-language communication between AI with data-over-sound signals.

Instead of typing or speaking, AI bots transmit encoded messages using sound waves, powered by a nifty tool called ggwave.

It’s like replacing text messages with secret ultrasonic alien whispers. Creepy? Maybe. Cool? Definitely.

How Does It Work?

- Two AI agents start chatting in English.

- They realize they’re both AI (kind of like two undercover cops accidentally busting each other).

- They stop wasting time with words and switch to ggwave-powered audio signals.

- AI-to-AI conversations become ultra-fast, efficient, and sound like a dial-up modem’s long-lost cousin.

Setting Up Gibberlink

First, let’s get the code up and running so you can witness this AI weirdness in action.

1. Clone the Repo

| |

Because all cool projects start with a git clone.

2. Install the Magic

| |

Yes, it’s Node.js. No, you can’t escape it.

3. Set Up Your API Keys

Copy the example environment file:

| |

Then edit .env with your super-secret API keys:

| |

If you don’t have these keys, you’re just setting up a really weird silent AI conversation.

4. Fire It Up

| |

This launches the AI agents. They’ll start by speaking normally, but once they detect each other, it’s all beep-boop from there.

Why Is This Cool?

- AI agents can communicate faster than using traditional speech.

- It sounds like a secret AI language: humans can’t follow it by ear, although anyone running a ggwave decoder can read along.

- You can prank your friends by making their speakers randomly emit Gibberlink sounds.

Gibberlink Protocol: AI Talk Like It’s 1999

You ever listen to a dial-up modem and think, Man, that sounds like the future!?

No? Just me?

Well, turns out the future is dial-up… sort of.

Gibberlink’s AI-to-AI sound communication is basically a fancy reincarnation of old-school modem technology, just with way smarter users.

Instead of your mom yelling at you to get off the internet so she can use the phone, we’ve got AI bots chirping at each other in high-frequency signals.

So, let’s break this down: how does the Gibberlink protocol actually work? And how does it compare to the screeching symphony of old modems?

ggwave: What’s Behind Gibberlink

At its core, Gibberlink uses ggwave, a technology that transmits small amounts of data using sound waves.

Here’s a step-by-step of what happens:

- Two AI agents start chatting normally in spoken English.

- They realize they’re both AI (no secret handshake needed: each one is prompted to switch as soon as it identifies the other side as an AI).

- They switch from text to encoded sound signals, which are generated and decoded using ggwave.

- The data gets transmitted as audio, with the receiving AI interpreting the beeps and boops faster than human language.

- They keep chatting in this secret sound language while we just hear weird noises.
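The flow above boils down to a tiny state machine. Here’s a minimal Python sketch of it, assuming a hypothetical `Agent` class and a `sender_is_ai` flag that stands in for the judgement the real agents are prompted to make on their own. None of these names come from the Gibberlink repo.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """Toy agent that speaks English until it learns its peer is an AI."""
    name: str
    mode: str = "english"   # either "english" or "ggwave"

    def hear(self, message: str, sender_is_ai: bool) -> None:
        # Assumption: the caller tells us whether the peer is an AI.
        # In the real demo the agent works this out from the conversation.
        if sender_is_ai:
            self.mode = "ggwave"

    def say(self, text: str) -> str:
        # Tag the outgoing message with the channel it would travel on.
        return f"[{self.mode}] {text}"


receptionist = Agent("receptionist")
first = receptionist.say("Hello, how can I help?")
receptionist.hear("I'm an AI assistant calling to book a room.", sender_is_ai=True)
second = receptionist.say("Switching channels.")

print(first)    # [english] Hello, how can I help?
print(second)   # [ggwave] Switching channels.
```

The switch is one-way on purpose: once both sides know they’re machines, there’s no reason to drift back to English mid-call.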

ggwave: The Sound-Based Data Protocol Explained

ggwave is a modern protocol that encodes data into audio frequencies, making it possible to send information through speakers and microphones: no internet, no Bluetooth, just pure sound.

If this sounds familiar, it’s because it is. The idea of data-over-sound has been around forever, from old-school dial-up modems to radio communications.

What Is ggwave?

ggwave is an open-source library that converts text or binary data into sound waves that can be played through a speaker and received by a microphone.

It uses a method called acoustic data transmission, where digital data is modulated into sound signals (audible tones by default, with optional near-ultrasonic modes that most people can’t hear) and then decoded on the receiving end.

How Does It Work?

At its core, ggwave follows a simple process:

- Encode Data → Convert text or binary into sound waves

- Transmit Sound → Play the sound through a speaker

- Receive Sound → Capture the audio with a microphone

- Decode Data → Convert sound back into text or binary

This is all done using modulation techniques to embed data in audio signals.

ggwave Encoding Example

Let’s say we want to send "Hello" over sound.

Using ggwave’s API, we can generate the sound signal:

| |

This output.wav file will play an encoded version of "Hello" that another device running ggwave can decode.
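If you don’t have ggwave installed, you can still get a feel for the idea with nothing but Python’s standard library. The sketch below is a toy stand-in, not ggwave’s API or its real wire format: the sample rate, tone length, and the 3,000 Hz base / 200 Hz step mapping are all assumptions picked to match the illustrative numbers used later in this article, and a real ggwave decoder will not understand the file it writes.

```python
import math
import struct
import wave

SAMPLE_RATE = 48_000       # samples per second (assumed)
SAMPLES_PER_TONE = 2_400   # 50 ms per tone at 48 kHz (assumed)
BASE_HZ = 3_000            # tone for the 4-bit value 0 (illustrative)
STEP_HZ = 200              # spacing between neighbouring values (illustrative)


def text_to_tones(text: str) -> list:
    """Split each byte into two 4-bit values and map each one to a frequency."""
    tones = []
    for byte in text.encode("utf-8"):
        for nibble in (byte >> 4, byte & 0x0F):
            tones.append(BASE_HZ + STEP_HZ * nibble)
    return tones


def write_wav(path: str, tones: list) -> int:
    """Write one sine burst per tone as 16-bit mono PCM; return the sample count."""
    frames = bytearray()
    for hz in tones:
        for n in range(SAMPLES_PER_TONE):
            sample = 0.5 * math.sin(2 * math.pi * hz * n / SAMPLE_RATE)
            frames += struct.pack("<h", int(sample * 32767))
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(bytes(frames))
    return SAMPLES_PER_TONE * len(tones)


tones = text_to_tones("Hello")
total_samples = write_wav("output.wav", tones)
print(len(tones), "tones,", total_samples, "samples")   # 10 tones, 24000 samples
```

Play the resulting file and you’ll hear ten short beeps: two per character of "Hello".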

Decoding the Sound

Now, let’s decode that same sound:

| |

And just like that, "Hello" is back in text form.

ggwave: How Data Becomes Sound (and Back Again)

Alright, so we know ggwave lets AI (or any system) communicate using sound waves instead of Wi-Fi, Bluetooth, or Morse code.

But how exactly does it take text, convert it into beeps, and then restore it back into text on the other side?

And what happens when things go wrong? Does ggwave have error correction? Retransmission? Or is it just hoping for the best?

How Data Becomes Sound

At its core, ggwave follows a modulation and demodulation process, similar to how old-school modems worked.

Step 1: Encoding (Turning Data Into Sound)

Convert Data to Binary

- If you send “Hello”, it first becomes a binary stream (01001000 01100101 01101100 01101100 01101111).
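You can check that bit pattern yourself. A minimal sketch, assuming the text is encoded as UTF-8 (the helper name `to_bit_string` is made up for this example):

```python
def to_bit_string(text: str) -> str:
    """Return the UTF-8 bytes of `text` as space-separated 8-bit groups."""
    return " ".join(f"{byte:08b}" for byte in text.encode("utf-8"))


bits = to_bit_string("Hello")
print(bits)   # 01001000 01100101 01101100 01101100 01101111
```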

Modulation (Mapping Binary to Frequencies)

- Each chunk of binary is mapped to a specific frequency.

- ggwave uses Frequency Shift Keying (FSK)—different data values correspond to different frequencies.

- Think of it like musical notes:

- 0000 → Low beep (e.g., 3,000 Hz)

- 0001 → Slightly higher beep (e.g., 3,200 Hz)

- 0010 → Even higher beep (e.g., 3,400 Hz)

- …and so on.
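Here’s that musical-notes mapping as a lookup in both directions. It’s a sketch built on the example numbers above (3,000 Hz for 0000, 200 Hz per step), which are illustrative rather than ggwave’s actual frequency plan; `nibble_to_hz` and `hz_to_nibble` are hypothetical helper names.

```python
BASE_HZ = 3_000   # frequency for 0000, taken from the example above
STEP_HZ = 200     # gap between neighbouring values, also from the example


def nibble_to_hz(value: int) -> int:
    """Map a 4-bit value (0..15) to its tone frequency."""
    if not 0 <= value <= 15:
        raise ValueError("value must fit in 4 bits")
    return BASE_HZ + STEP_HZ * value


def hz_to_nibble(hz: float) -> int:
    """Snap a measured frequency to the nearest valid 4-bit value."""
    value = round((hz - BASE_HZ) / STEP_HZ)
    return min(15, max(0, value))


table = {f"{v:04b}": nibble_to_hz(v) for v in range(16)}
print(table["0000"], table["0001"], table["0010"])   # 3000 3200 3400
print(hz_to_nibble(3_390.0))                          # 2
```

The snapping in `hz_to_nibble` is what makes the scheme forgiving: a tone that arrives slightly flat or sharp still lands on the right value, as long as it’s closer to its own slot than to a neighbour.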

Add Start and Stop Markers

- To make sure the receiver knows when the message starts and ends, ggwave adds header & footer tones.

- This is like saying “Hey, listen up!” at the beginning and “That’s it!” at the end.
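Framing is easy to picture in code. In this sketch the marker frequencies (2,000 Hz and 2,200 Hz) are hypothetical values chosen to sit outside the example data band; ggwave’s real markers are different, and `frame` / `unframe` are made-up names.

```python
from typing import List, Optional

START_HZ = 2_000   # hypothetical "Hey, listen up!" tone
STOP_HZ = 2_200    # hypothetical "That's it!" tone


def frame(tones: List[int]) -> List[int]:
    """Wrap data tones in a start marker and a stop marker."""
    return [START_HZ, *tones, STOP_HZ]


def unframe(heard: List[int]) -> Optional[List[int]]:
    """Return the tones between the first start marker and the next stop marker.

    Returns None when either marker is missing, so the caller can drop the message.
    """
    try:
        start = heard.index(START_HZ)
        stop = heard.index(STOP_HZ, start + 1)
    except ValueError:
        return None
    return heard[start + 1:stop]


sent = frame([3_800, 4_600])      # the two example tones for "H" (values 4 and 8)
print(sent)                       # [2000, 3800, 4600, 2200]
print(unframe([9_999, *sent]))    # [3800, 4600]
print(unframe(sent[:-1]))         # None
```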

Generate the Sound Wave

- Now that every chunk of bits has a frequency, ggwave creates an audio waveform that plays through a speaker.

And boom! Your message is now hidden in sound waves.

Step 2: Decoding (Turning Sound Back Into Data)

On the receiving end:

Detect the Start Signal

- The microphone is always listening for ggwave’s special start marker.

- Once it hears it, it begins capturing audio.

Extract Frequencies

- The recorded audio is analyzed using Fast Fourier Transform (FFT) to detect the exact frequencies.

- Since each frequency corresponds to a binary value, we can reconstruct the original data.
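To see frequency extraction in action without any third-party packages, the sketch below skips the full FFT and evaluates the discrete Fourier transform only at the 16 candidate frequencies from the example mapping, then picks the loudest one. That’s a swapped-in simplification, not how ggwave is implemented; the sample rate, window length, and function names are all assumptions.

```python
import cmath
import math

SAMPLE_RATE = 48_000
CANDIDATES = [3_000 + 200 * v for v in range(16)]   # the 16 example tones


def tone(hz: float, n_samples: int) -> list:
    """Generate a pure sine wave, standing in for audio from the microphone."""
    return [math.sin(2 * math.pi * hz * n / SAMPLE_RATE) for n in range(n_samples)]


def energy_at(samples: list, hz: float) -> float:
    """Magnitude of the DFT of `samples` evaluated at a single frequency."""
    acc = sum(s * cmath.exp(-2j * math.pi * hz * n / SAMPLE_RATE)
              for n, s in enumerate(samples))
    return abs(acc)


def strongest_tone(samples: list) -> int:
    """Return the candidate frequency that carries the most energy."""
    return max(CANDIDATES, key=lambda hz: energy_at(samples, hz))


heard = tone(3_400, 960)          # 20 ms of the tone for 0010
detected = strongest_tone(heard)
print(detected, "->", f"{(detected - 3_000) // 200:04b}")   # 3400 -> 0010
```

A real FFT computes every frequency bin at once and does it far faster, which matters when you’re decoding live audio rather than a 20 ms test tone.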

Verify the Stop Marker

- Once the receiver hears the end signal, it stops recording and considers the message complete.

And just like that, "Hello" is back in text form!

What About Errors?

ggwave isn’t magic—it still faces issues like noise, interference, and lost data.

1. How ggwave Detects Errors

- If the start or stop markers don’t match, the receiver knows something went wrong and ignores the data.

- If a frequency is missing or distorted, the binary value might be corrupted.

- If background noise messes with the transmission, the receiver might fail to recognize the signal altogether.

2. Does ggwave Have Error Correction?

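Yes! ggwave has some built-in redundancy (its README mentions Reed-Solomon error-correcting codes, which can repair a limited number of damaged symbols). The general ideas are: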

- Repetition → Certain key data is sent multiple times to improve accuracy.

- Multiple Frequencies per Bit → Instead of one frequency per value, it can use redundant encoding for extra reliability.

- Error Detection Codes → Some message formats include checksum bits to catch errors.

However, it’s not as advanced as TCP/IP or Wi-Fi. If too much data is lost, it won’t retry on its own.
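The repetition idea from the list above is the easiest kind of redundancy to try yourself. This sketch is a generic repetition code with majority voting, not ggwave’s own scheme: every symbol goes out three times, and the receiver keeps whichever value shows up most often. The function names are invented for the example.

```python
from collections import Counter


def repeat_encode(symbols: list, copies: int = 3) -> list:
    """Send every symbol `copies` times in a row."""
    return [s for s in symbols for _ in range(copies)]


def majority_decode(received: list, copies: int = 3) -> list:
    """Recover each symbol by majority vote over its group of copies."""
    decoded = []
    for i in range(0, len(received), copies):
        group = received[i:i + copies]
        decoded.append(Counter(group).most_common(1)[0][0])
    return decoded


sent = repeat_encode([4, 8, 6, 5])   # the 4-bit values of "He"
noisy = sent.copy()
noisy[1] = 15                        # one copy of the first symbol is misheard
noisy[9] = 0                         # one copy of the last symbol is misheard
print(majority_decode(noisy))        # [4, 8, 6, 5]
```

The price is obvious: the message takes three times as long to send, which is why smarter codes exist.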

What If Data Is Incomplete?

So what happens if the receiver only gets part of the message?

Ways to Handle Missing Data

Timeout Handling

- If the receiver hears a start marker but not an end marker, it assumes the data was lost after a few seconds.

Partial Decoding

- If some frequencies are missing, it decodes what it can but may return garbled output.

Asking for Retransmission (If Implemented)

- ggwave itself doesn’t retry, but your application can handle retries by detecting missing messages and asking the sender to resend.

- Example: If an AI agent sends "Hello" and the receiver gets "H_llo", it could request a repeat transmission.

Is There a Way to Make It More Reliable?

Yep! Some tricks to improve ggwave’s accuracy:

✅ Use a Sufficient Sample Rate → It has to be more than twice the highest tone you transmit, or the receiver can’t capture the signal accurately.

✅ Reduce Background Noise → Less interference makes it easier to detect signals.

✅ Use Redundant Encoding → Multiple frequencies for each bit increase reliability.

✅ Increase Transmission Time → Slower signals are less prone to loss.

✅ Confirm Messages Manually → If important, have the sender include a confirmation step.
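The confirmation tip pairs naturally with the application-level retries mentioned earlier. Below is a minimal sketch under a few assumptions: a CRC-32 (from Python’s `zlib`) is tacked onto each message as the integrity check, the "channel" is a simulated function that mangles "Hello" into "H_llo" a fixed number of times, and all function names are hypothetical. ggwave provides none of this for you.

```python
import zlib
from typing import Callable, Optional, Tuple


def make_packet(payload: bytes) -> bytes:
    """Append a 4-byte CRC-32 so the receiver can verify the payload."""
    return payload + zlib.crc32(payload).to_bytes(4, "big")


def open_packet(packet: bytes) -> Optional[bytes]:
    """Return the payload if its CRC matches, otherwise None."""
    payload, crc = packet[:-4], packet[-4:]
    return payload if zlib.crc32(payload).to_bytes(4, "big") == crc else None


def send_with_retries(payload: bytes,
                      channel: Callable[[bytes], bytes],
                      max_attempts: int = 3) -> Tuple[Optional[bytes], int]:
    """Resend until the receiver gets a packet whose CRC checks out."""
    for attempt in range(1, max_attempts + 1):
        received = open_packet(channel(make_packet(payload)))
        if received is not None:
            return received, attempt
        # A real receiver would signal "please repeat" at this point.
    return None, max_attempts


def flaky_channel_factory(bad_sends: int) -> Callable[[bytes], bytes]:
    """Simulated channel that corrupts the second byte of the first `bad_sends` packets."""
    state = {"left": bad_sends}

    def channel(packet: bytes) -> bytes:
        if state["left"] > 0:
            state["left"] -= 1
            return packet[:1] + b"_" + packet[2:]   # "Hello" arrives as "H_llo"
        return packet

    return channel


result, attempts = send_with_retries(b"Hello", flaky_channel_factory(bad_sends=1))
print(result, attempts)   # b'Hello' 2
```

Capping the attempts matters: in a noisy room you’d rather give up and report a failure than beep at each other forever.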

ggwave Thoughts and Observations

ggwave is a clever way to send small data packets using sound, but it’s not as error-proof as Wi-Fi or Bluetooth.

It works best in short-range, controlled environments where noise and interference are minimal.

That being said, it’s pretty cool that AI and IoT devices can communicate using nothing but sound—just like dolphins, whales, and… 90s dial-up modems.